Delphi Technique: Predicting Emerging Opportunities and Challenges in Renewable Energy

4 | Administering the Delphi Feedback Process

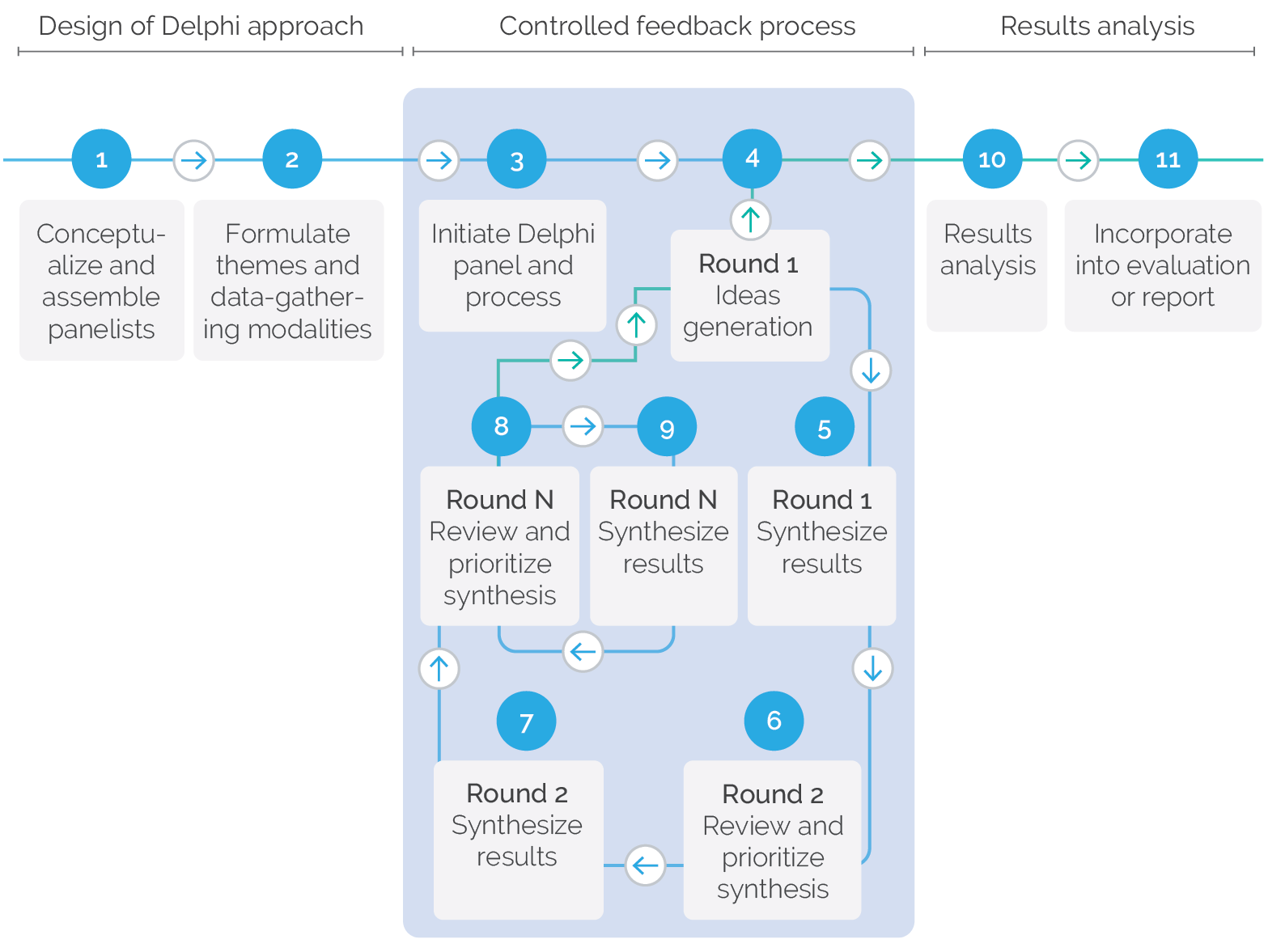

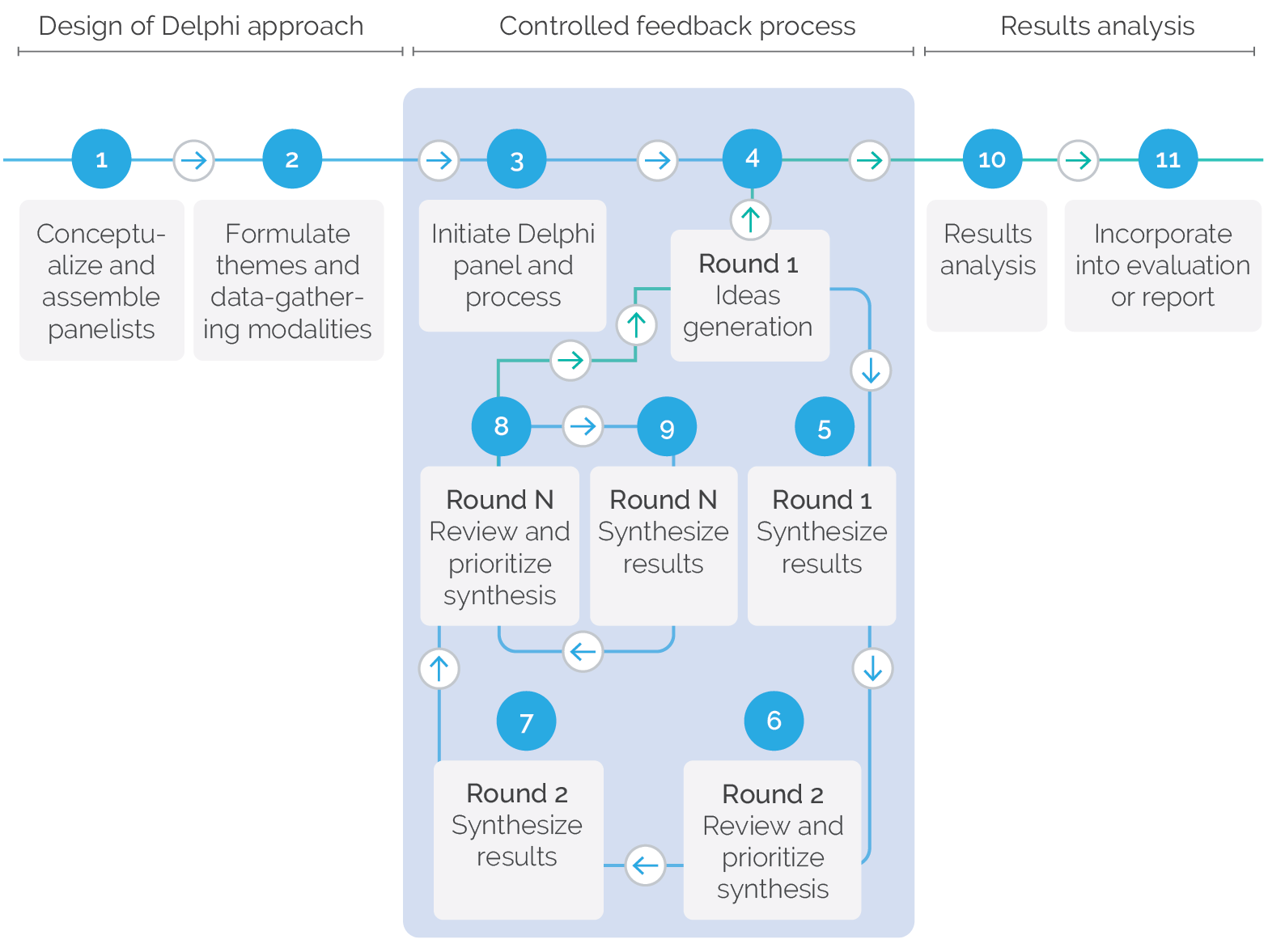

The objective of the second stage is to administer the controlled feedback process tightly, so that the expert opinions solicited through the Delphi technique are gathered in a structured and orderly way. Administering this stage well is vital for maintaining the integrity of the findings and working toward convergence of opinions. Areas covered in this chapter are shaded blue in figure 4.1.

Figure 4.1. The Delphi Process—Feedback Stage

Source: Independent Evaluation Group.

Kicking Off the Delphi Process

It is important to ensure that all panelists clearly understand the purpose of their participation in the Delphi, the process and time commitment required, and the modalities through which they will interact with those administering the process (figure 4.1, step 3). Typically, this can be accomplished through a face-to-face or virtual meeting at which panelists can ask questions and seek clarification. The overall schedule should be shared with them, together with the estimated effort and time required, including contingencies, since the exact number of rounds or iterations needed cannot be known in advance. It is also important to stress that the panel's feedback and data will be kept anonymous and confidential. After the kick-off meeting, the round 1 questionnaires or templates can be shared, initiating the feedback process.

In the RE evaluation, a virtual meeting via videoconference proved more practical than a face-to-face meeting, given the panelists' different international locations. IEG opened the meeting with a presentation that introduced the Delphi process to the panelists and explained how the results would be used in the RE evaluation. The presentation defined their roles as global experts in RE and clarified what was expected from the panel. The panelists were informed of the key questions they were expected to answer about scaling up RE in developing countries and the Bank Group's readiness to support its clients in related endeavors. Some publicly available data on global RE markets were also distributed to give panelists a shared understanding of the facts. Panelists were told that they were not expected to carry out any additional research unless they chose to do so. Instead, IEG stressed that its interest in the Delphi exercise was to take advantage of their existing RE knowledge and expertise related to achieving the clean energy transition.

The virtual kick-off meeting also provided an opportunity to clarify the Delphi panel's feedback process. A step-by-step demonstration of the Microsoft Word questionnaire template familiarized panelists with the format for providing their feedback in the first round. They were asked to complete the template individually and confidentially, and it was confirmed that any data IEG shared with the panel in later rounds would also be confidential and aggregated without attribution to specific individuals. IEG also informed the panel of an email account set up specifically for corresponding with the global expert panel on RE; this account was the primary means of communication and the address to which completed templates were to be submitted. Panelists' other questions were also answered during the meeting. Additionally, the panel was given contact information for the IEG team leader and other team members administering the Delphi process, should they have questions or concerns during the feedback process.

An important understanding reached during the virtual kick-off meeting concerned the time frame for implementing the Delphi process. The schedule included two planned iterative rounds and a contingency plan for an optional third round and a wrap-up meeting, as necessary. The IEG team recognized that there should be sufficient time to complete the tasks at each stage but that the process should not drag on unduly, especially given the demanding schedules of a group of prominent global experts. For the same reason, both IEG and the panelists needed to adhere to the agreed schedule. The following schedule was agreed for the first two rounds, to be completed within a month:

- Distribution of round 1 response templates to panelists by IEG—immediately after virtual kick-off meeting.

- Completed round 1 response templates submitted by panelists to IEG—12 days after kick-off meeting.

- Synthesis of round 1 responses and issuance of round 2 templates with synthesized information to panelists by IEG—7 days after receipt of round 1 response templates.

- Completed round 2 response templates, including rescoring priorities, submitted by panelists to IEG—7 days after issuance of round 2 template with synthesized information.

The panelists were also informed of contingent steps that might be added to the feedback process should the need arise (a possible third round of feedback and a wrap-up meeting), although no specific time frame for these activities was set at kick-off.
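Because each milestone is counted from the one before it, the agreed offsets add up to 12 + 7 + 7 = 26 days from kick-off to the round 2 deadline, which is how the first two rounds fit within a month. The Python sketch below chains those offsets. The kick-off date, the milestone labels, and the `build_schedule` helper are hypothetical; it assumes calendar days and that every step finishes exactly on time, and it does not model the contingent third round or wrap-up meeting, whose timing was left open.

```python
from datetime import date, timedelta

# Hypothetical encoding of the agreed round 1-2 schedule. Each offset is in
# calendar days (an assumption) and is counted from the PREVIOUS milestone.
MILESTONES = [
    ("Round 1 templates distributed", 0),  # immediately after kick-off
    ("Round 1 responses due", 12),         # 12 days after kick-off
    ("Round 2 templates issued", 7),       # 7 days after round 1 receipt
    ("Round 2 responses due", 7),          # 7 days after round 2 issuance
]


def build_schedule(kickoff: date) -> list[tuple[str, date]]:
    """Return (milestone, due date) pairs by chaining the offsets from kick-off."""
    schedule = []
    current = kickoff
    for name, offset_days in MILESTONES:
        current += timedelta(days=offset_days)
        schedule.append((name, current))
    return schedule


if __name__ == "__main__":
    kickoff = date(2024, 3, 1)  # hypothetical kick-off date
    schedule = build_schedule(kickoff)
    for name, due in schedule:
        print(f"{due.isoformat()}  {name}")
    print(f"Rounds 1 and 2 span {(schedule[-1][1] - kickoff).days} days")
```

With a kick-off on 1 March 2024, for example, round 1 responses would be due on 13 March and round 2 responses on 27 March, 26 days after kick-off.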

Round 1—Idea Generation

The next step is for each panelist to complete the round 1 questionnaire or template individually (figure 4.1, step 4). This first round asks open-ended questions intended to generate ideas from the experts. Panelists enter their responses in the template and submit it to the administrators for synthesis.

In the RE evaluation, immediately after the virtual kick-off meeting, each panelist received by email two questionnaire templates, one for opportunities and one for challenges facing the scale-up of RE. Examples of the blank templates shared with the panel in round 1, which followed the structure illustrated in figure 3.2, are provided in appendix A. Although the template provided structure for data input, it was not populated with any data, giving panelists an open-ended opportunity to freely express their individual predictions (capped at 10 priority challenges and opportunities). The panelists were also asked to prioritize their responses by allocating 100 points among them, with more points indicating an item of higher importance. While the panelists separately prepared their feedback, the IEG team communicated with them to keep to the agreed schedule. All eight panelists submitted a completed set of questionnaires, and the round 1 submission of responses proceeded smoothly and concluded successfully.
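The 100-point allocation is a constant-sum scoring rule, so a submitted template can be checked mechanically before synthesis. A minimal Python sketch, assuming that the cap of 10 applies to each template separately and that points are whole numbers; `validate_allocation` and the item labels are hypothetical (the evaluation itself used Microsoft Word templates, not code).

```python
# Hypothetical check of one round 1 template; the rules below are assumptions.
MAX_ITEMS = 10      # assumed: cap of 10 priority items applies per template
TOTAL_POINTS = 100  # each panelist distributes exactly 100 points


def validate_allocation(allocation: dict[str, int]) -> list[str]:
    """Return the problems found in one panelist's round 1 template.

    `allocation` maps each opportunity (or challenge) the panelist wrote to
    the points assigned to it; more points mean higher importance. An empty
    result means the allocation is valid.
    """
    problems = []
    if not allocation:
        problems.append("no items submitted")
    if len(allocation) > MAX_ITEMS:
        problems.append(f"{len(allocation)} items exceeds the cap of {MAX_ITEMS}")
    for item, points in allocation.items():
        if not isinstance(points, int) or points < 0:
            problems.append(f"invalid points for {item!r}: {points!r}")
    total = sum(p for p in allocation.values() if isinstance(p, int))
    if total != TOTAL_POINTS:
        problems.append(f"points sum to {total}, not {TOTAL_POINTS}")
    return problems


# Hypothetical example: three items whose points add up to 100.
print(validate_allocation({"opportunity A": 40, "opportunity B": 35, "opportunity C": 25}))  # prints []
```

Returning a list of problems rather than raising on the first one lets administrators send a panelist every needed correction in a single follow-up message.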

Synthesis of Round 1 Results

The administrators of the Delphi process now play the crucial role of synthesizing the various expert responses and, where feasible, grouping them, since multiple experts may express similar opinions (figure 4.1, step 5). Any initial prioritization can also be assessed, and aggregated scores can be shared in subsequent rounds. Subject matter expertise within the team administering the Delphi process is vital at this stage to interpret responses and synthesize the information into a cohesive set reflecting the views of the overall panel. Once the synthesis is complete, a revised template is prepared. The revised template follows a similar structure, but unlike the initial one, which was blank to allow open-ended responses, it is populated with the panelists' synthesized responses without individual attribution (panelists see the ideas of others but do not know whose ideas they are). Sharing these synthesized results informs the panel of the broader range of responses from other panelists, which may influence subsequent feedback and (re)prioritization. Anonymity matters so that panelists are influenced by the ideas themselves rather than by the experts who may have originated them.
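Grouping similar ideas is a judgment call made by the subject matter experts, but once each raw response has been mapped to a synthesized idea, the aggregation and anonymization can be expressed compactly. The Python sketch below assumes that the aggregated score is the total of points summed across panelists (averages would work equally well); the `synthesize` function, panelist IDs, and idea labels are all hypothetical. Panelist IDs are used only to count how many experts raised each idea and never appear in the output, mirroring the no-attribution rule.

```python
from collections import defaultdict


def synthesize(responses, grouping):
    """Aggregate round 1 responses into anonymized, grouped ideas (hypothetical).

    responses: {panelist_id: {raw_response: points}}
    grouping:  {raw_response: synthesized_idea}, decided by the team's
               subject matter experts (the grouping itself is not automated).
    Returns (idea, total_points, n_panelists) tuples, highest total first,
    with no panelist identifiers.
    """
    totals = defaultdict(int)
    supporters = defaultdict(set)
    for panelist, allocation in responses.items():
        for raw, points in allocation.items():
            idea = grouping[raw]
            totals[idea] += points
            supporters[idea].add(panelist)  # used only for counting
    return sorted(
        ((idea, totals[idea], len(supporters[idea])) for idea in totals),
        key=lambda row: (-row[1], row[0]),  # by total points, then by name
    )


# Hypothetical round 1 input from two panelists.
responses = {
    "P1": {"cheap solar": 60, "storage costs falling": 40},
    "P2": {"solar PV cost decline": 50, "carbon pricing": 50},
}
grouping = {
    "cheap solar": "Falling solar costs",
    "solar PV cost decline": "Falling solar costs",
    "storage costs falling": "Cheaper storage",
    "carbon pricing": "Carbon pricing",
}
for row in synthesize(responses, grouping):
    print(row)
```

Here the two solar responses collapse into a single idea, "Falling solar costs", raised by both panelists with 110 points in total, which is what the revised template would show without naming either panelist.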

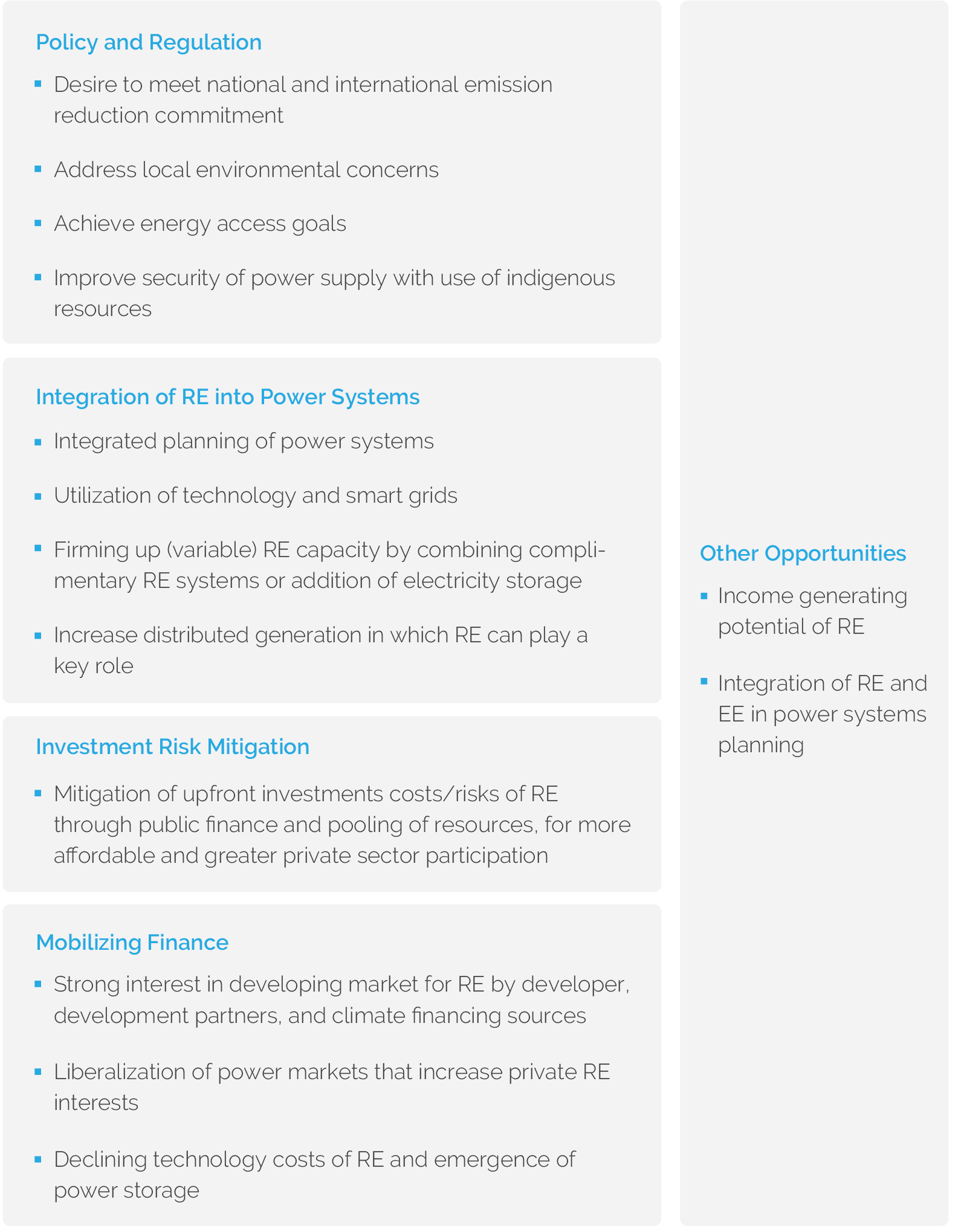

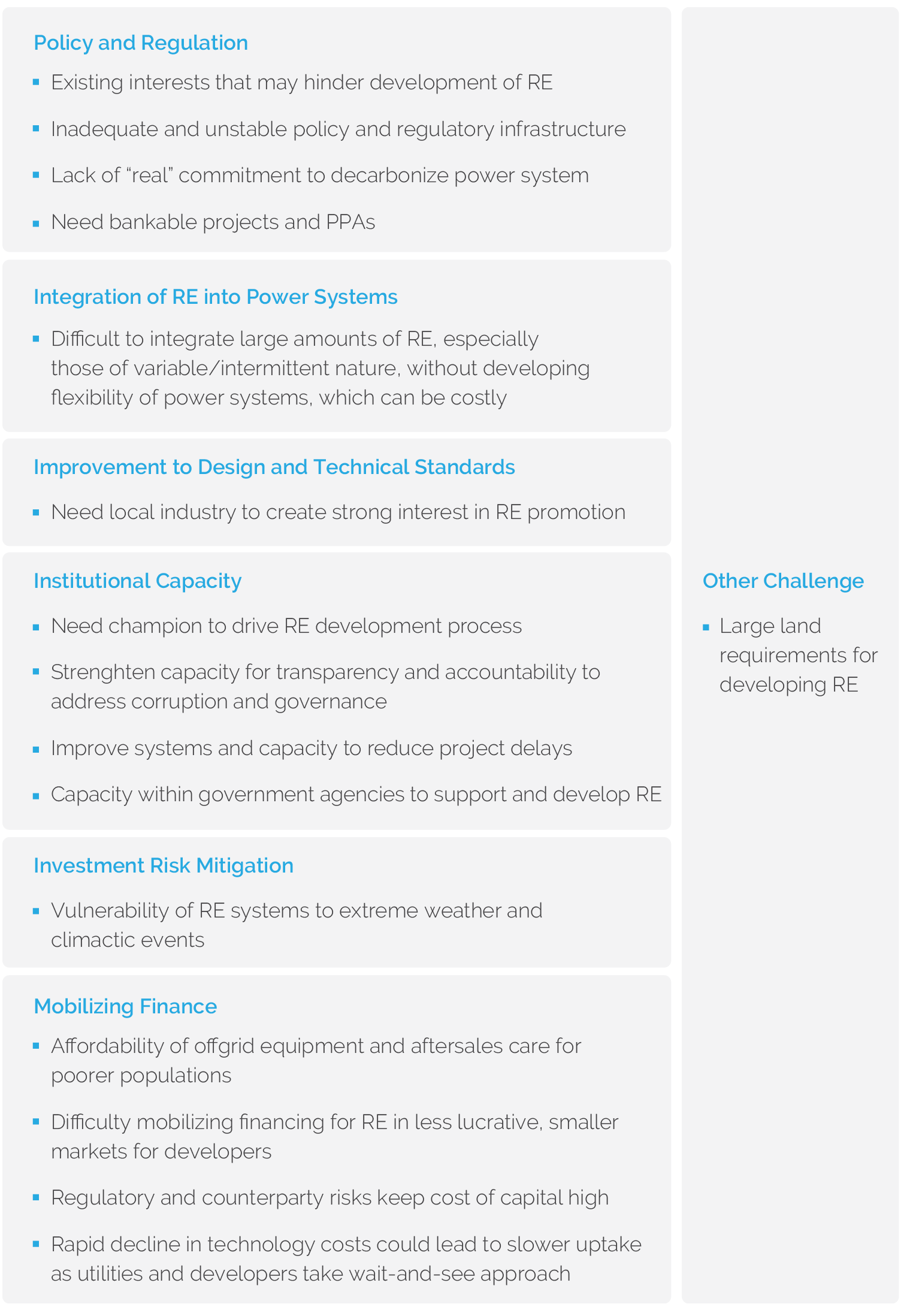

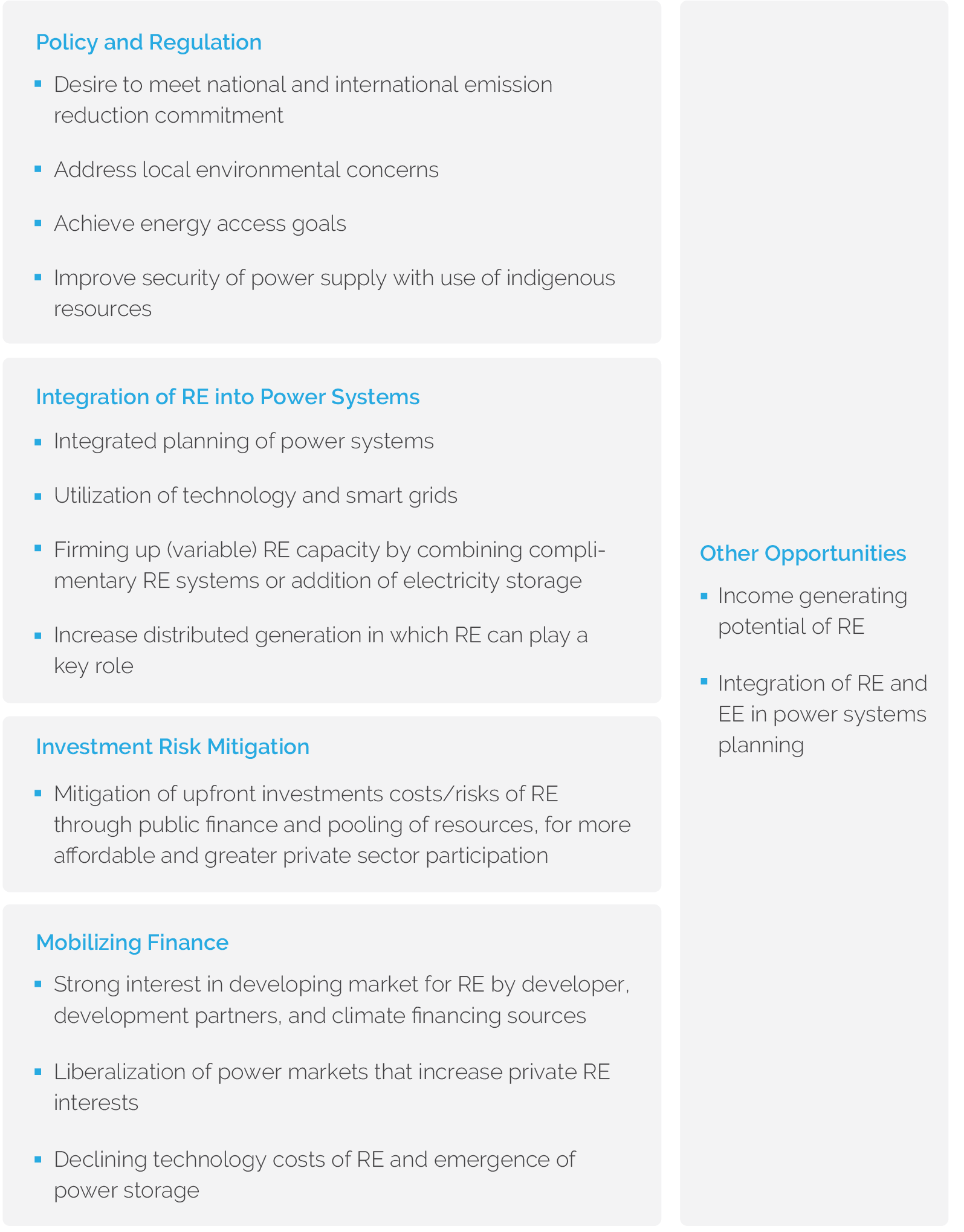

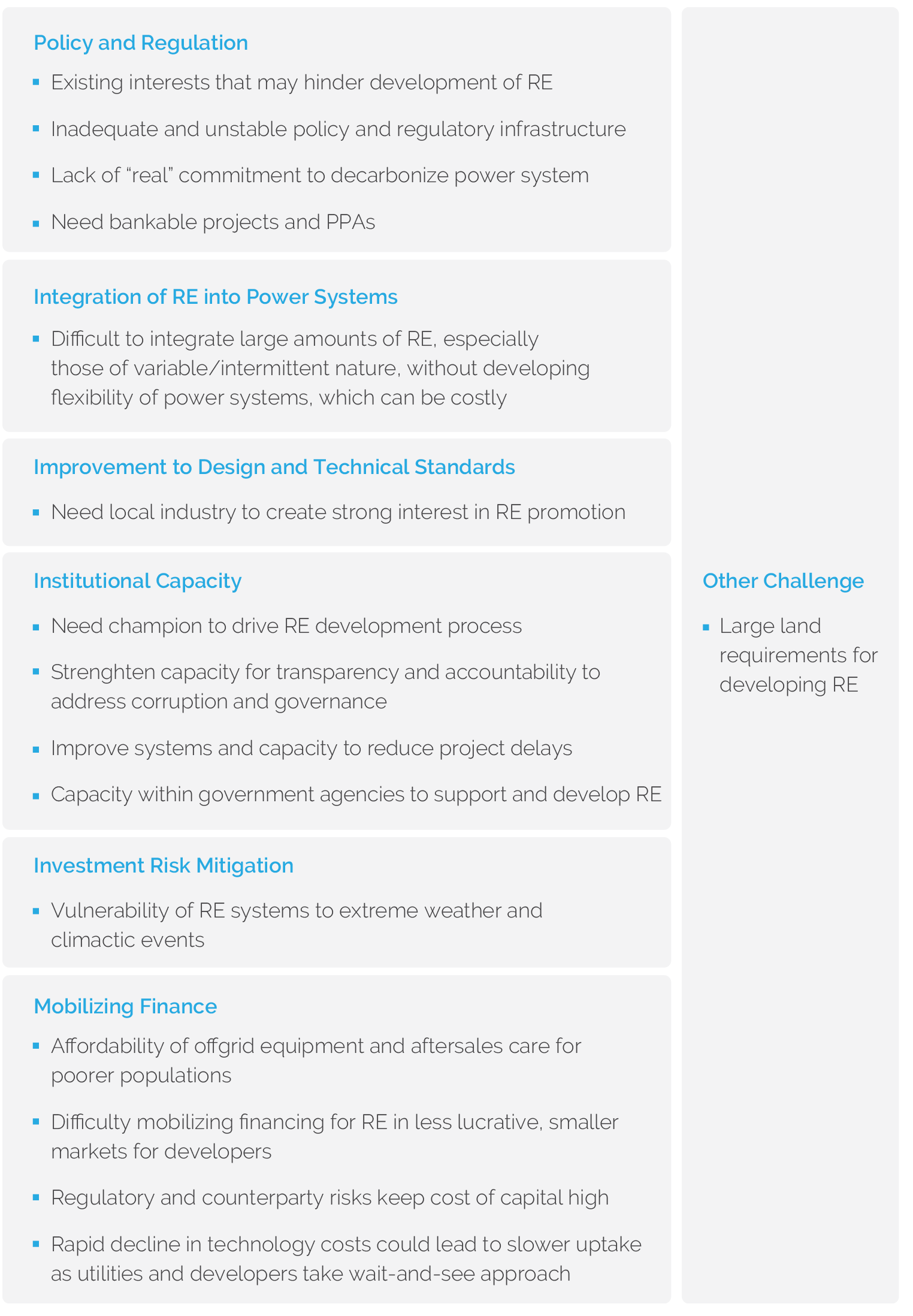

In the RE evaluation, the team prepared the basic structure of the round 2 template before receiving the completed round 1 questionnaires, aware that the schedule allowed only seven days to complete the synthesis. Two team members were designated to synthesize the feedback: a subject matter specialist in RE with experience leading similar focus groups and a member of IEG's methods team who was familiar with the Delphi technique. Once the templates were received, the first challenge was to group responses that highlighted similar opportunities or challenges and then to categorize them into broad reform areas. In this case, most of the resulting items (27) were grouped according to the barriers identified in the ToC described in box 3.1, and a few outliers (3) were grouped separately. In total, round 1 yielded 14 opportunities and 16 challenges identified by the panel, as indicated in figure 4.2.

Figure 4.2. Delphi Panel–Generated Ideas for RE—Round 1

Emerging opportunities put forward by the panel in round 1, grouped by reform area or barrier

Emerging challenges put forward by the panel in round 1, grouped by reform area or barrier

Source: Independent Evaluation Group.

Note: EE = energy efficiency; RE = renewable energy.

Soliciting Round 2 Responses

Once panelists receive the synthesized results, they are asked to reprioritize the aggregated set of round 1 responses, choosing from both their own ideas and those identified by others (figure 4.1, step 6). A scoring system is typically applied.

In the RE evaluation, the panelists were presented with two round 2 templates for scaling up RE, one for opportunities and another for challenges. Unlike in round 1, however, these templates were populated with the synthesized results reflecting the combined views of the entire panel, without attribution to any specific panelist. Using these templates, which shared all panelists' round 1 input, each panelist was asked to rescore the 14 opportunities and 16 challenges. This is an important aspect of the iterative feedback process in a Delphi technique: each panelist can now reprioritize with the benefit of input from other experts, potentially being influenced by them. The panelists rescored by allocating 100 points across the 14 opportunities and another 100 points across the 16 challenges; more points indicated a higher priority. The panelists were also asked to score the same opportunities and challenges on a five-point Likert scale and to score in the same way the corresponding actions or solutions, which were also prepopulated based on the round 1 synthesis. Similar Likert scale ratings were requested for the Bank Group's readiness to support clients with specific reforms (actions or solutions), assessed in terms of its position of influence and its capacity. The prepopulated templates and scoring scales can be accessed in a supplemental annex on the IEG website.
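Round 2 collects two kinds of scores for the same fixed list of items: a constant-sum allocation of 100 points and a five-point Likert rating. The Python sketch below shows one way a single template's scores might be checked and summarized. It assumes that every panelist scores every prepopulated item (giving 0 points where appropriate) and summarizes points by their mean and Likert ratings by their median, a common choice for ordinal data. The `summarize_round2` name and the three-item example, scaled down from the 14 opportunities and 16 challenges, are hypothetical.

```python
from statistics import mean, median

LIKERT_MIN, LIKERT_MAX = 1, 5
TOTAL_POINTS = 100


def summarize_round2(point_sheets, likert_sheets, items):
    """Summarize round 2 rescoring for one template (hypothetical helper).

    point_sheets:  one {item: points} dict per panelist, covering every
                   prepopulated item and summing to 100 (assumed rule).
    likert_sheets: one {item: rating} dict per panelist, ratings 1-5.
    Returns {item: (mean_points, median_likert)}.
    """
    for sheet in point_sheets:
        if set(sheet) != set(items) or sum(sheet.values()) != TOTAL_POINTS:
            raise ValueError("each panelist must spread 100 points over all items")
    for sheet in likert_sheets:
        if set(sheet) != set(items):
            raise ValueError("each panelist must rate every item")
        if any(not LIKERT_MIN <= r <= LIKERT_MAX for r in sheet.values()):
            raise ValueError("Likert ratings must be between 1 and 5")
    return {
        item: (
            mean(sheet[item] for sheet in point_sheets),
            median(sheet[item] for sheet in likert_sheets),
        )
        for item in items
    }


# Hypothetical scores from two panelists for three opportunities.
items = ["O1", "O2", "O3"]
points = [{"O1": 50, "O2": 30, "O3": 20}, {"O1": 40, "O2": 40, "O3": 20}]
likert = [{"O1": 5, "O2": 4, "O3": 2}, {"O1": 4, "O2": 4, "O3": 3}]
print(summarize_round2(points, likert, items))
```

The median is used for the Likert ratings because the scale is ordinal; the mean of the point allocations preserves their constant-sum meaning, since the item means still add up to 100.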

Since there was already significant convergence among panelists in round 1, the IEG team saw an opportunity to explore some key emerging issues with the experts in greater depth. Round 2 therefore included a separate supplemental questionnaire seeking the panel's responses to a few specific RE-related questions, to which panelists were free to respond in essay format. The questions were as follows:

- Given the trade-off between (i) decreasing costs of RE technologies, particularly wind and solar; and (ii) increasing costs on power systems to ensure adequate flexibility for a smooth integration of variable or intermittent RE generation sources:

  - How can developing countries manage this trade-off?

  - What are the prospects for availability of economical electricity storage solutions (for example, thermal, batteries), and how will this affect the trade-off indicated above?

- If the Paris Climate Agreement and its emission reduction commitments are a significant opportunity that can support the development of RE, how important is the mobilization of the funds committed in the Agreement by developed countries (that is, $100 billion per year by 2020) to deploy RE generation in developing countries to meet the climate change goals?

Synthesis of Round 2 Results

The synthesis for round 2 includes evaluating the rescored (reprioritized) ideas by the panel (figure 4.1, step 7). At this point, the administrators will need to determine whether there is sufficient convergence in prioritization of responses or whether further rounds are required. It is possible at this stage to discard ideas that no longer garner support. It is also possible to seek additional information related to any specific priorities that may be emerging.
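How much agreement counts as "sufficient convergence" is left to the administrators. One convention often used in Delphi studies with Likert ratings, assumed here rather than taken from the RE evaluation, treats an item as converged when the interquartile range (IQR) of panelists' ratings is at most 1 point on the five-point scale. A minimal Python sketch of that check follows; the `assess_convergence` function, the threshold, and the example ratings from eight panelists are hypothetical.

```python
from statistics import median, quantiles

IQR_THRESHOLD = 1.0  # assumed consensus rule for a five-point Likert scale


def assess_convergence(ratings_by_item):
    """Classify each item as converged or divergent using the IQR rule.

    ratings_by_item: {item: [one Likert rating per panelist]} (at least two
    ratings per item, as statistics.quantiles requires).
    Returns {item: (median_rating, iqr, converged)}.
    """
    result = {}
    for item, ratings in ratings_by_item.items():
        q1, _, q3 = quantiles(ratings, n=4, method="inclusive")
        iqr = q3 - q1
        result[item] = (median(ratings), iqr, iqr <= IQR_THRESHOLD)
    return result


# Hypothetical round 2 ratings from eight panelists.
ratings = {
    "O1": [4, 4, 5, 4, 4, 5, 4, 3],  # tightly clustered
    "O2": [1, 5, 2, 5, 3, 4, 1, 5],  # widely spread
}
for item, (med, iqr, converged) in assess_convergence(ratings).items():
    print(item, med, iqr, "converged" if converged else "divergent")
```

With these ratings, O1 has a median of 4.0 and an IQR of 0.25 and is treated as converged, while O2, with an IQR of 3.25, would be a candidate for another round or would be reported as an item on which the panel diverges.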

In the RE evaluation, panelists for the most part submitted their round 2 feedback promptly. Two panelists required follow-up conversations for clarification, which delayed receipt of all submissions by a couple of days. More important, there were no dropouts: all panelists submitted completed questionnaires or templates (including the supplemental questionnaire). In one case, a panelist inadvertently copied other panel members when submitting his responses. IEG had no reason to believe that this exerted undue influence, since most panelists had already submitted their responses by then. A quick review confirmed that most submissions contained sufficient information and showed sufficient consensus for the panel results to be analyzed and conclusions formulated. The panelists were duly notified and thanked for sharing their expert opinions for the RE evaluation; without their commitment to RE and to the evaluation, the Delphi process could not have succeeded.

Additional Iterative Rounds of Feedback

Round 3 and any subsequent rounds follow an iterative process similar to round 2, continuing until a sufficient degree of consensus develops among panelists' predictions (figure 4.1, steps 8 and 9). Convergence may not be attainable, especially on new or contentious subjects; a lack of convergence is itself a finding, indicating significant divergence of views on an item's importance or priority. Successive rounds can be time consuming and costly and may not bring about greater alignment of perspectives, so administrators should weigh these factors. The design of the Delphi process or the phrasing of its questions may also be a key reason for divergent opinions (for example, panelists may interpret a question differently); in such circumstances, the Delphi process may need to be redesigned, although panelists may be reluctant to participate in a reconfigured effort.
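The considerations above can be turned into an explicit stopping rule. The Python sketch below shows one hypothetical way to do so; it is not the procedure used in the RE evaluation. It stops when a target share of items has converged. It also stops, reporting divergence as a finding, when item medians barely moved between the last two rounds, since another round is then unlikely to help. Otherwise, it allows another round only within a fixed budget. The thresholds (80 percent of items, a 0.5-point median shift, three rounds) and the `next_step` name are assumptions.

```python
def next_step(converged, prev_medians, curr_medians, round_no,
              max_rounds=3, target_share=0.8, stability_tol=0.5):
    """Decide whether to run another Delphi round (hypothetical rule).

    converged:     {item: bool} from the latest round's consensus check.
    prev_medians:  {item: median rating} from the previous round.
    curr_medians:  {item: median rating} from the latest round.
    round_no:      number of the round just completed.
    Default thresholds are assumptions, not values from the evaluation.
    """
    if not converged:
        raise ValueError("no items to assess")
    share = sum(converged.values()) / len(converged)
    if share >= target_share:
        return "stop: consensus reached"
    # If no item's median moved by more than the tolerance, views have
    # stabilized; report the remaining divergence as a finding.
    shifts = [abs(curr_medians[i] - prev_medians[i]) for i in curr_medians]
    if shifts and max(shifts) <= stability_tol:
        return "stop: stable divergence"
    if round_no >= max_rounds:
        return "stop: round budget reached"
    return "run another round"


# Hypothetical case: half the items converged, but one median is still moving.
print(next_step({"a": True, "b": False}, {"a": 4, "b": 2}, {"a": 4, "b": 3.5}, round_no=2))  # prints "run another round"
```

Checking stability before the round budget means that a panel whose views have settled is not asked for another round merely because budget remains, consistent with the point that extra rounds may not bring greater alignment.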

In the RE evaluation, further iterative rounds were not needed because of the significant number of ideas that were generated through the process and the relatively clear convergence across the panel on members’ collective priorities. Therefore, it was decided to use the data on hand at the end of round 2 and proceed with analyzing results and drawing conclusions to be used in the RE evaluation, which are presented in the next chapter. The optional wrap-up meeting was also omitted.