Advanced Content Analysis: Can Artificial Intelligence Accelerate Theory-Driven Complex Program Evaluation?

4 | Can Artificial Intelligence Accelerate Theory-Driven Complex Program Evaluation?

This pilot study set out to test the applicability, usefulness, and added value of using AI for advanced theory-based content analysis within the framework of IEG’s thematic evaluations.

Our first learning goal was to assess whether SML text classification models can accurately replicate manual content analysis and whether they can do so quickly and efficiently. Performance at predicting exact sublabels was modest, and the model would need further optimization before it could be applied independently to new data sets. However, the high accuracy in predicting top-level categories suggests that these preliminary results could be improved. With some further work, a text classifier whose coding accuracy is acceptable when compared with expert coding therefore appears achievable.
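The gap between sublabel and top-level performance can be illustrated with a minimal sketch. It assumes hierarchical labels written as `category/sublabel` strings; the label names and the `top_level` and `accuracy` helpers are hypothetical and are not taken from the study's actual coding frame or model.

```python
from typing import Callable, Sequence


def top_level(label: str) -> str:
    """Return the top-level category of a 'category/sublabel' string."""
    return label.split("/", 1)[0]


def accuracy(
    expert: Sequence[str],
    predicted: Sequence[str],
    key: Callable[[str], str] = lambda label: label,
) -> float:
    """Share of items where the predicted label matches the expert label.

    `key` maps each label to the level being compared: the identity
    function for exact sublabels, `top_level` for top-level categories.
    """
    if len(expert) != len(predicted):
        raise ValueError("expert and predicted must have the same length")
    if not expert:
        raise ValueError("at least one labeled item is required")
    matches = sum(key(e) == key(p) for e, p in zip(expert, predicted))
    return matches / len(expert)


# Hypothetical labels, for illustration only.
expert = [
    "nutrition/behavior_change",
    "nutrition/supplementation",
    "health/service_delivery",
    "wash/sanitation",
]
predicted = [
    "nutrition/supplementation",  # right category, wrong sublabel
    "nutrition/supplementation",
    "health/service_delivery",
    "wash/hygiene",               # right category, wrong sublabel
]

exact_acc = accuracy(expert, predicted)               # exact sublabel match
top_acc = accuracy(expert, predicted, key=top_level)  # top-level match only
print(f"exact sublabel accuracy: {exact_acc:.2f}")
print(f"top-level accuracy:      {top_acc:.2f}")
```

Reporting both figures side by side shows whether a classifier's errors stay within the correct top-level category, which is the pattern described above.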

The current approach requires significant effort on the part of subject matter experts to extract sections of text of interest ready for labeling. However, IEG’s new automated document section extraction routine could be used to overcome this manual step. Therefore, further research aimed at combining the document section extraction routine with the classifier model could bring the approach closer to automated label prediction and the goal of faster and more efficient analyses.

Beyond speed and efficiency, the exploratory data analysis and visualization capabilities used for SML offer valuable additional insights to complement traditional methods. The ability to identify and visualize unique characteristics within country programs could prove useful for informing program management decisions and evaluation designs. Contextual relevance of interventions and evaluation findings is a perennial concern, so being able to establish the distinctiveness of a country program could help determine the transferability and suitability of lessons learned.

The second learning goal was to determine if UML approaches could generate novel and important emergent insights from a large and rich data set. Overall, the topic model showed excellent performance in identifying inductive topics that were not only coherent and domain relevant but also novel and insightful.

At this stage of development, it is particularly promising that the AI was able to generate hypotheses on good practices for international development and provide evidence supporting them. That IEG showed these topics statistically to be key predictors of project performance indicates not only that the UML findings were robust and meaningful but also that this approach could provide important evidence for programmatic decision-making.

Although the UML approach will always require domain expertise from a human expert to validate and interpret the topic modeling results, it offers significant potential to complement traditional methods by providing an opportunity to scale inductive analyses to much larger data sets. The visualization methods could also help identify patterns that would not be obvious to a human analyst.

The final learning goal was to understand the potential of knowledge graphs to structure machine learning outputs according to a ToC and enable theory-based evaluation of program performance. Our preliminary investigations show that rule-based reasoning can be used to identify simple relationships among components of a knowledge schema representing a ToC. However, difficulties remain with multilabel modeling and the complexities of setting up a more granular and unified knowledge graph schema to comprehensively represent possible causal pathways.

In its current form, rule-based reasoning can provide only supporting quantitative information on the achievement of project outcomes. Therefore, further research is required before knowledge graphs can enable a theory-based evaluation of program performance.
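As a rough illustration of the kind of simple relationship that rule-based reasoning can identify, the sketch below applies one rule to a toy set of triples in plain Python. It is not Vadalog and does not reproduce the study's knowledge graph schema: the entity names, the predicates (`implements`, `contributes_to`, `achieved`), and the `supported_pathways` function are all hypothetical.

```python
from typing import Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)

# Toy knowledge graph; every name here is hypothetical.
triples: Set[Triple] = {
    ("project_A", "implements", "intervention_1"),
    ("project_A", "achieved", "outcome_1"),
    ("project_B", "implements", "intervention_1"),
    ("intervention_1", "contributes_to", "outcome_1"),  # link from the ToC
}


def supported_pathways(graph: Set[Triple]) -> Set[Triple]:
    """Apply a single rule to the graph.

    Rule: if a project implements an intervention, the ToC says that
    intervention contributes to an outcome, and the project achieved
    that outcome, then the pathway (project, intervention, outcome)
    is supported by the evidence.
    """
    implements = {(s, o) for s, p, o in graph if p == "implements"}
    contributes = {(s, o) for s, p, o in graph if p == "contributes_to"}
    achieved = {(s, o) for s, p, o in graph if p == "achieved"}

    derived: Set[Triple] = set()
    for project, intervention in implements:
        for toc_intervention, outcome in contributes:
            if toc_intervention == intervention and (project, outcome) in achieved:
                derived.add((project, intervention, outcome))
    return derived


pathways = supported_pathways(triples)
for project, intervention, outcome in sorted(pathways):
    print(f"{project}: {intervention} -> {outcome} is supported")
```

Counting derived pathways per intervention yields only the kind of supporting quantitative information described above; representing multistep or competing causal pathways would require a richer schema and rule set.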

An overarching limitation of all the AI approaches used in this pilot study is the significant front-end investment required to establish the analytical framework and prepare data for machine learning. Although this may be reduced by developing and integrating the approach with IEG’s document section extraction routine, the use of AI for theory-driven content analysis will currently only be worthwhile for larger-scale studies, particularly those that would benefit from being regularly updated. However, where organizations use standardized reporting templates, the initial investment could be leveraged by applying the SML and UML models to multiple portfolios with minimal tailoring.

Although cognizant of these limitations, we must remember that the findings presented in this white paper are part of only a small body of work exploring the use of AI for evaluation synthesis purposes. For such early-stage work, the preliminary results are promising, especially those for SML and UML technologies. Given the acceleration in the deployment and sophistication of AI technologies in other sectors, we may be optimistic about what the future holds for the use of AI technologies in the development evaluation sector.

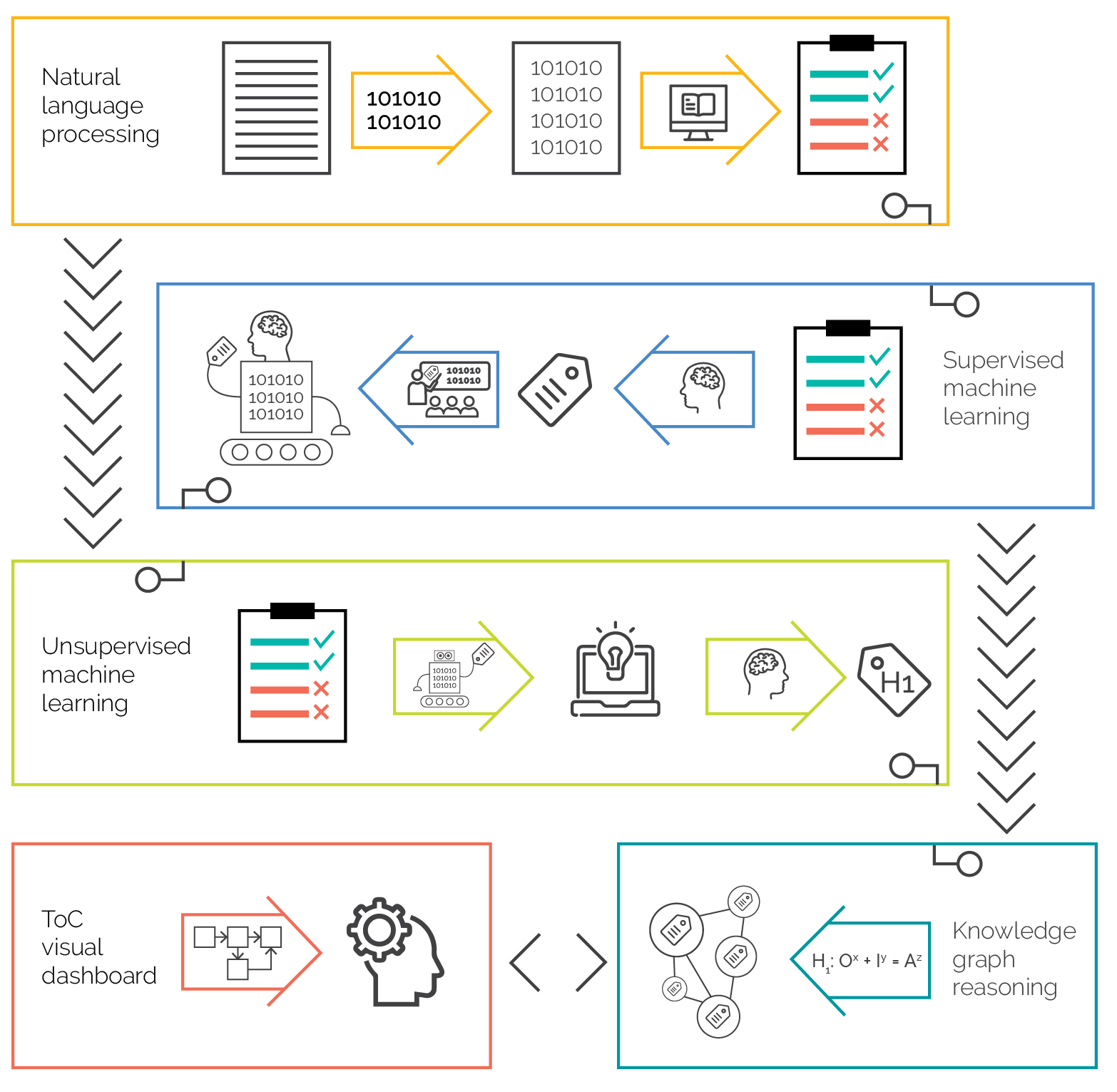

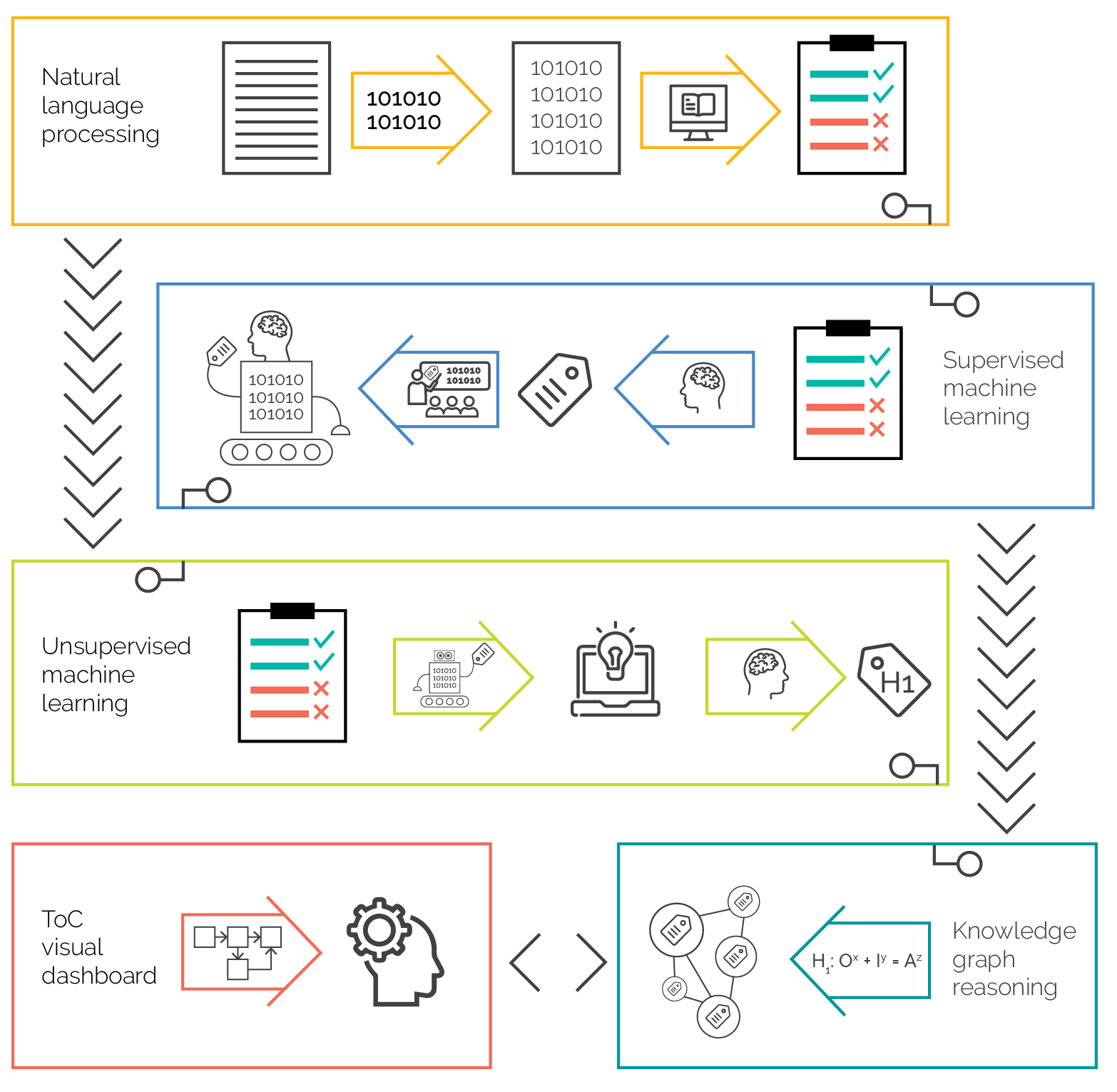

In this context, and to inform future research priorities, it is worthwhile to consider what a fully functional AI-enabled theory-driven content analysis might look like. Figure 4.1 provides a representation of the sequence and articulation of the multiple AI models and capabilities that need to be refined to maximize the potential applications of AI for theory-based portfolio analysis. First, the model would automate the identification and extraction of text of interest from raw documents using NLP approaches. It would then accurately predict deductive labels from the extracted text using SML technologies (text classification model) and identify emergent labels and patterns in the data using UML technologies (topic modeling). Finally, it would structure and interrogate the evidence using theory-based analysis in the form of a knowledge graph and Vadalog rule-based reasoning. The inclusion of a simple, engaging dashboard that could be used to easily drill down into the ToC could further improve the interpretability of the findings.

This paper represents one of the earliest of the few investigations into this field and clearly lays out pathways for further research and refinement. If significant further research were to build on this work, development timelines could arguably be accelerated. Developments in the field of evaluation can be inspired by other sectors where AI technologies are already used for complex project management and risk quantification purposes (Sai-hung and Flyvbjerg 2020; Aldana et al. 2021).

In conclusion, the results of this feasibility study show that NLP approaches can be usefully applied to theory-based content analysis and add significant value to complement existing synthesis methods. SML and UML are promising, particularly topic modeling and t-SNE visualization, and knowledge graphs can identify simple relationships in the data according to a conceptual framework. However, additional work is needed to automate document extraction, optimize text classification models, and develop more sophisticated knowledge graphs capable of analyzing more complex theories of change.

At their current stage of development, these AI technologies can be a useful tool for increasing the scope, speed, and depth of larger portfolio review processes. Further research addressing the limitations identified in this study could enable wider adoption of AI within the field of development evaluation and help the field leverage big evaluation data.

Figure 4.1. Maximizing the Applications of Artificial Intelligence for Theory-Based Portfolio Analysis

Source: Independent Evaluation Group.

Note: The figure shows the sequence and articulation of the multiple artificial intelligence models and the capabilities that need to be refined to maximize the potential applications of artificial intelligence for theory-based portfolio analysis.