Advanced Content Analysis: Can Artificial Intelligence Accelerate Theory-Driven Complex Program Evaluation?

1 | The Challenge

Challenges in Development Evaluation and the Promise of Artificial Intelligence

The increasing use of systems approaches and adaptive management within development programming has led to growing demand for complexity-responsive evaluation methods (Bamberger, Raimondo, and Vaessen 2016). These methods must provide deeper insights into development project performance while working within the constraints of decreasing budgets and ever-increasing expectations for real-time evidence. In this context, the increasing volume and accessibility of evaluation evidence are both part of the solution and part of the problem.

The large and growing volume of data and evaluation reports currently available presents a substantial opportunity for learning through evidence synthesis. But although current evaluation methods can be highly effective at generating both context-specific and transferable learning, the manual nature of traditional analyses precludes the comprehensive and systematic examination of big evaluation data. Evaluation practitioners simply cannot rigorously synthesize such large evidence bases by hand. As a result, much of the breadth and scale of learning that big evaluation data could offer is missed.

Artificial intelligence (AI) methodologies have revolutionized our ability to make sense of big data. If applied to the field of development evaluation, these innovations may offer much faster, more comprehensive, and systematic synthesis of evaluation data and reports. This could not only generate powerful learning opportunities for development practice but also be timely and cost-efficient. Automated analyses could be regularly updated and scaled as new data and evidence are generated, ensuring that the knowledge remains current and justifying the initial investment.

However, AI for evaluation purposes remains an emergent technology. Most work at this frontier has focused on applying AI to data collection, cleaning, and modeling for primary research (McKenzie 2018; Korenblum 2017; Bravo et al. 2021). There has been some pioneering work exploring the use of AI for evaluation synthesis, and the World Bank is beginning to use AI to identify portfolios of work and classify content such as risks (Bravo et al. 2021).1 Nevertheless, the use of AI for content synthesis and theory-driven analyses is limited. More experimentation and learning are required to establish the potential benefits of AI for evaluation purposes.

As the organization responsible for promoting innovation in evaluation analyses at the World Bank, the Independent Evaluation Group’s (IEG) Methods Advisory Function commissioned a study to test the feasibility and usefulness of using AI to support and accelerate its thematic and country-focused evaluations. These evaluations assess the performance of World Bank projects and activities implemented over 5- to 10-year periods.

This white paper presents the results from the feasibility study and offers conclusions on the use of AI for evaluation syntheses and for deepening evidence and learning on development project results. It provides sufficient detail for a general overview of the technical methods used but is not intended to meet the higher experimental reproducibility standards typical of academic and related work. Further details are available in a separate technical report.

Could Artificial Intelligence Be Used to Accelerate Theory-Driven Complex Portfolio Evaluation?

The overall objectives of the feasibility study were to pilot and test the applicability, usefulness, and added value of using AI, including machine learning and knowledge graph methodologies, for advanced theory-based content analysis in the framework of IEG’s thematic evaluations. The pilot focused on a set of interventions from 64 countries that were within the scope of the IEG thematic evaluation The World Bank’s Support to Reducing Child Undernutrition (World Bank 2021). One pilot country from within this portfolio was selected as a test case for deeper analyses to determine if the AI methodologies could also support country-focused evaluations.

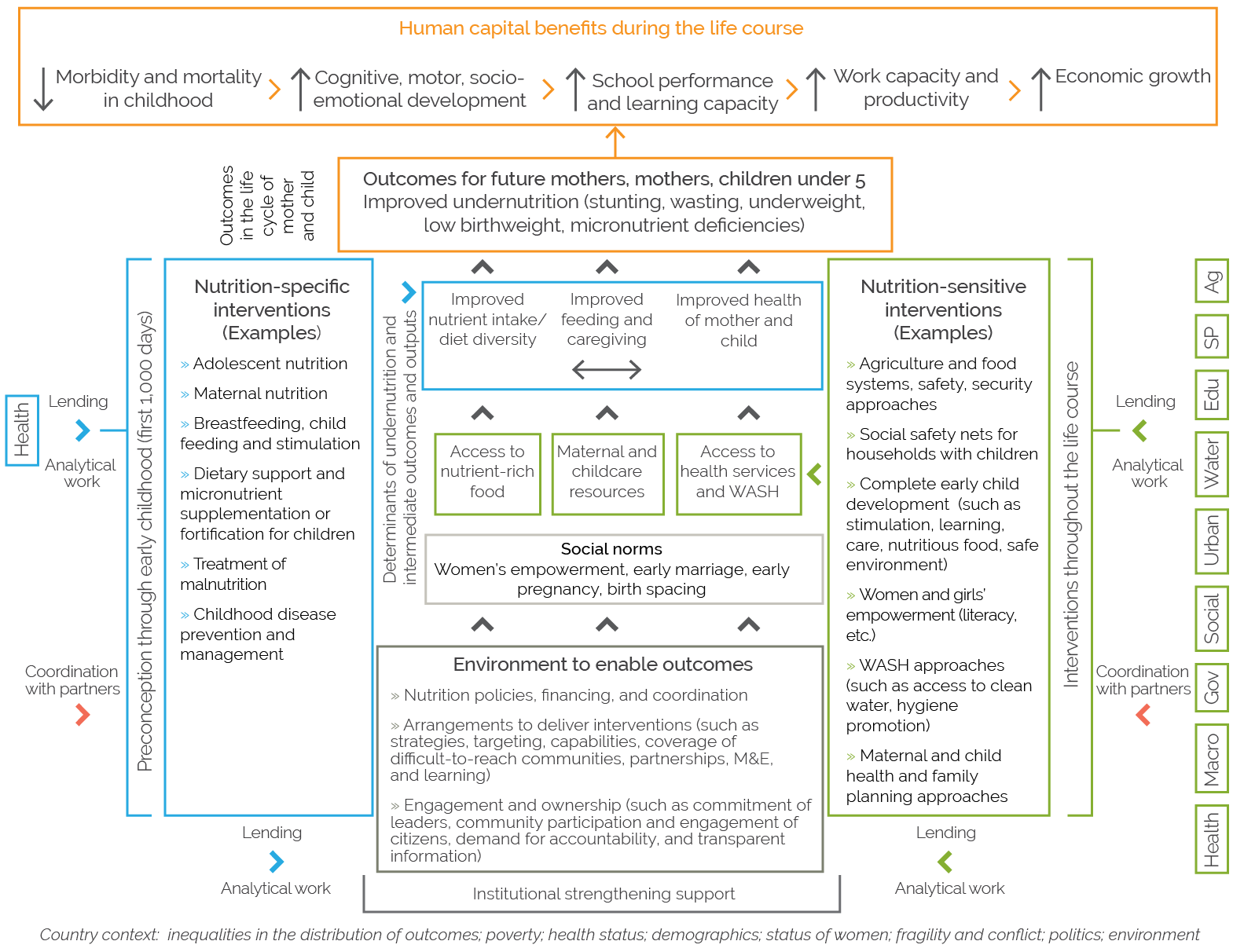

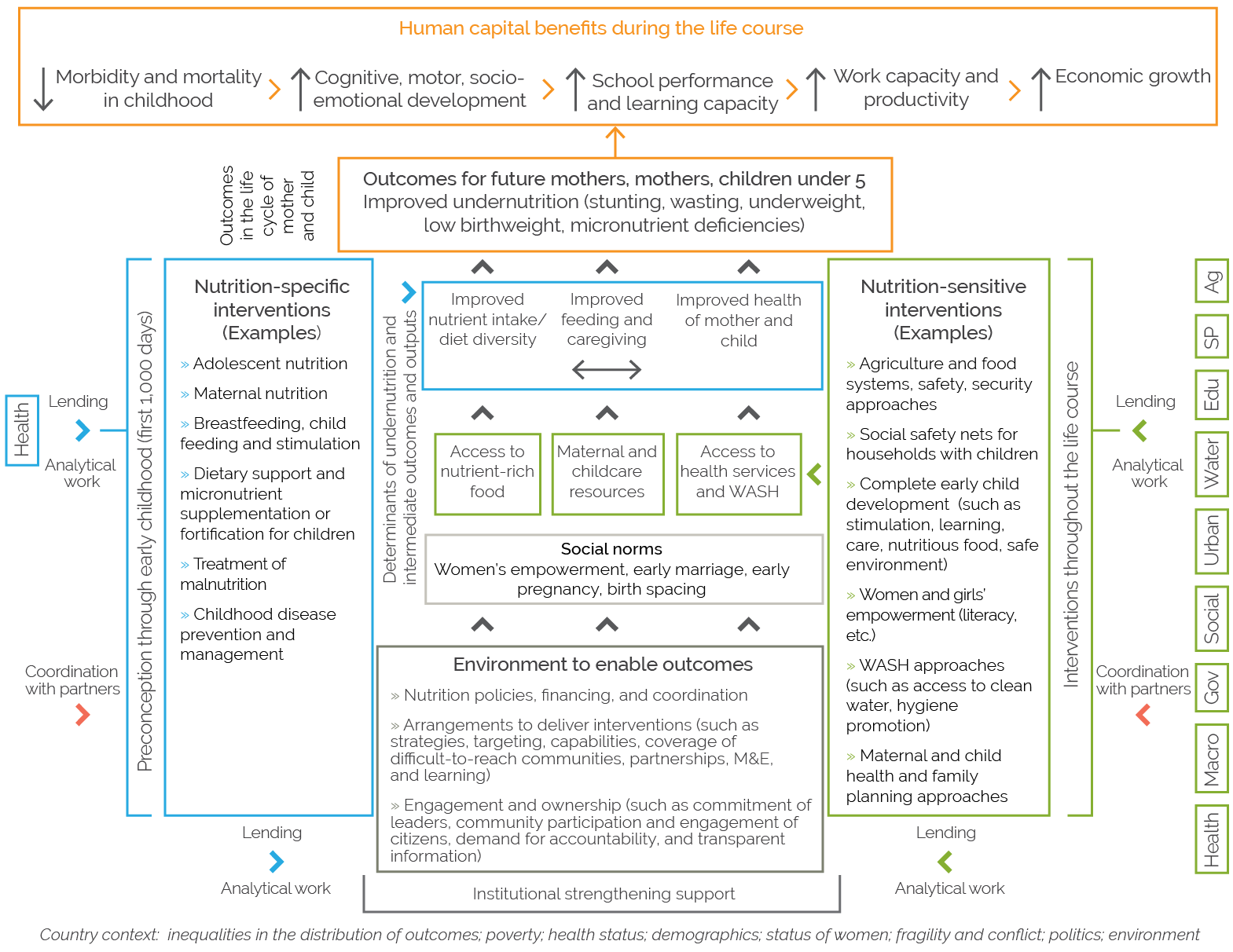

IEG evaluations typically use a predefined conceptual framework to enable a structured portfolio review. The conceptual framework maps out the latest approaches to improving outcomes in the thematic area in the form of a theory of change (ToC). The ToC presents a hypothesized results chain among intervention inputs, outputs, outcomes, and impacts, and factors considered important for intervention success.

The World Bank portfolio of activities and results is then compared with this ToC to determine whether World Bank support is consistent with best practice approaches, and whether these approaches have proven effective in the way intended. This kind of theory-driven approach is often used to determine program contribution when experimental methods are not possible.

For the AI methodologies to support and accelerate IEG evaluations, the pilot needed to determine if advanced content analysis using machine learning could identify intervention inputs, outputs, outcomes, impacts, and contributory factors, based on a conceptual framework developed by the IEG team. This conceptual framework is presented in figure 1.1. The pilot also sought to explore whether the AI methodologies, specifically knowledge graphs, could identify relationships among elements of the ToC as evidence for program contribution.
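To make the two tasks described above more concrete, the following is a minimal sketch, not the method used in the pilot. It assumes simple keyword matching in place of machine learning, and it counts co-occurrence within a passage as a crude stand-in for the relationships a knowledge graph would hold. The keyword lists, function names, and example passages are all hypothetical.

```python
from collections import Counter
from itertools import combinations

# Hypothetical keyword lists for each theory-of-change (ToC) element.
# These are illustrative assumptions, not taken from the IEG framework.
TOC_KEYWORDS = {
    "input": ["financing", "training"],
    "output": ["clinics built", "health workers trained"],
    "outcome": ["breastfeeding", "dietary diversity"],
    "impact": ["stunting", "wasting"],
}


def tag_passage(text):
    """Return the set of ToC elements whose keywords appear in the passage."""
    lowered = text.lower()
    return {
        element
        for element, keywords in TOC_KEYWORDS.items()
        if any(keyword in lowered for keyword in keywords)
    }


def cooccurrence_edges(passages):
    """Count how often two ToC elements are tagged in the same passage."""
    edges = Counter()
    for passage in passages:
        # Sort the tags so each pair is always stored in the same order.
        for pair in combinations(sorted(tag_passage(passage)), 2):
            edges[pair] += 1
    return edges


# Made-up passages standing in for text from project documents.
passages = [
    "Financing supported training so that health workers trained mothers on breastfeeding.",
    "Improved dietary diversity was associated with lower stunting.",
    "The project closed in 2015.",
]

edges = cooccurrence_edges(passages)
for (source, target), count in sorted(edges.items()):
    print(f"{source} -- {target}: {count}")
```

Co-occurrence of this kind only flags passages where two ToC elements are discussed together. It does not by itself show that one contributed to the other, so any such link would still need review by an evaluator.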

Figure 1.1. Conceptual Framework of Child Undernutrition

Source: Independent Evaluation Group, adapted from Maternal and Child Nutrition Study Group 2013 and UNICEF 1990.

Note: Ag = Agriculture; Edu = Education; Gov = Governance; Social = Social Development; Macro = Macroeconomic; M&E = monitoring and evaluation; SP = Social Protection; WASH = water, sanitation, and hygiene.

- The Independent Evaluation Group’s evaluations are available at https://ieg.worldbankgroup.org/evaluations.