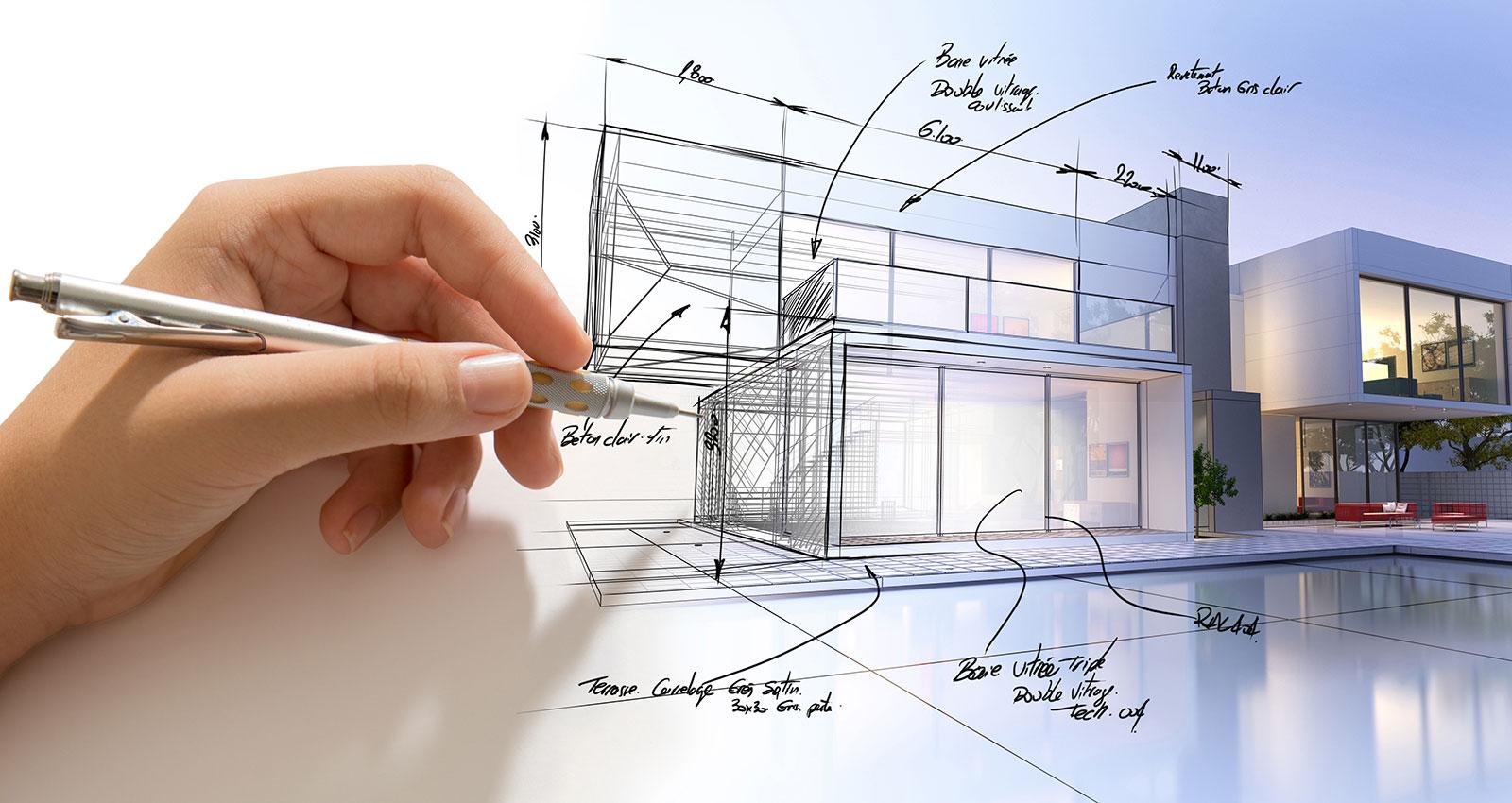

There is nothing quite like the excitement of starting the design of a new evaluation, or at least that’s how we feel in the Methods Team at the Independent Evaluation Group (IEG). Much as architects begin with a blank canvas and a seemingly simple brief that hides considerable complexity, evaluators begin with a blank page and seemingly simple questions that hide just as much. Questions like “What interventions work, for whom, under what circumstances, and how?” motivate most IEG evaluations.

The answers to such questions are complex and depend on (i) the nature of the interventions, which for IEG means assessing large portfolios of World Bank Group interventions that are multilevel, span multiple sites, and pursue outcomes that are often hard to measure; (ii) the nature of the systems and institutional structures in which the interventions are embedded; and (iii) the nature of causality and how change takes place.

In IEG evaluations, these three aspects coalesce to turn seemingly simple questions into “wicked problems,” which in turn require elaborate methodologies. To answer questions about complex interventions in equally complex contexts, we need evaluative approaches that draw on multiple sources of evidence, multiple methods to collect and analyze data, and multiple perspectives to interpret the results.

Just like any good architectural design, a good methodological design requires creativity and collaboration, rigor, and pragmatism.

Creativity and collaboration

Fortunately, in this kind of complex evaluative space, there is no “gold standard” or “silver bullet” methodology that can be replicated in all contexts. This is where the art comes in. Creativity and collaboration are essential for identifying the right design for a specific evaluation. This involves scouring data sources for information and evidence that may be telling but are less obvious at first. A blank canvas also allows evaluators to search across social science disciplines for useful theories, for promising methods to collect and analyze data, and for ways of combining them to bring out multiple perspectives and arrive at sound judgments.

We are bound only by our own imagination. New textual and image data, new technologies, machine learning, and artificial intelligence (AI) are now widely available. They bolster our capacities and encourage us to find new ways of combining these tools with in-depth qualitative and quantitative inquiry.

This is why innovation is a core mandate of the IEG Methods Team. In partnership with IEG evaluation teams, we identify new opportunities for using data, applying innovative methods of inquiry, or mixing them in unique ways. The evaluation questions and the intended use of the insights they deliver always drive the process, but a healthy dose of risk-taking and creativity is also involved. We have written blog posts, given presentations, and created the Methods Paper Series to catalog some of our experiments and share what we learn along the way with clients, partners, and the global evaluation community.

Rigor

In architecture, art must be coupled with the rigor of science and construction techniques for beautiful buildings to endure. In evaluation, the methodological scaffolding likewise needs to be robust, fully thought through, and carefully implemented so that it upholds the credibility of the evaluative enterprise.

Fortunately, here evaluators can rely on a rich set of cross-disciplinary rules and guidelines on how to theorize properly, how to conduct systematic social science inquiry, how to uphold standards of rigor when implementing the chosen methods, and how to triangulate multiple pieces of evidence to arrive at valid and reliable findings. This often boils down to being clear about the decisions made in implementing the methodology and being transparent about the limitations of the data and methods, as well as about the level of certainty and uniqueness of the evidence.

Pragmatism

An architectural masterpiece will not see the light of day if it does not meet the clients' requirements of budget, space, and function. Similarly, a rigorous, innovative, and beautiful evaluation design must also be fit for purpose. Under real-world conditions, this means the design is implemented in time to inform decision-making, stays within budget, and yields insightful findings, practical lessons, and recommendations for a range of stakeholders. It also means that the methods employed, the data used, and the analytical techniques applied must produce results that can be translated into plain, easily communicated messages.

Pragmatism must be a core tenet of the methodological advice and support that the Methods Team provides to IEG colleagues. There is a craft to evaluation design: a know-how that balances innovation and risk-taking with a clear grasp of the skills, project management ability, team dynamics and composition, timeline, and budget it takes to realize the vision. It takes a diverse team with complementary skills to deliver an impactful evaluation, much as it takes a range of specialized skills to turn an architectural design into a building that is both beautiful and solid.

